AI-Powered Storage Market Size, Share & Forecast | 28.2% CAGR

Global AI-Powered Storage Market Size, Share & Analysis By Offering (Hardware, Software), By Storage System (Network Attached Storage (NAS), Direct Attached Storage (DAS), Storage Area Network (SAN)), By Storage Medium (Solid State Drive (SSD), Hard Disk Drive (HDD)), By End-User (Enterprises, Data Centers) Industry Landscape, Automation Trends & Forecast 2025–2034

Report Overview

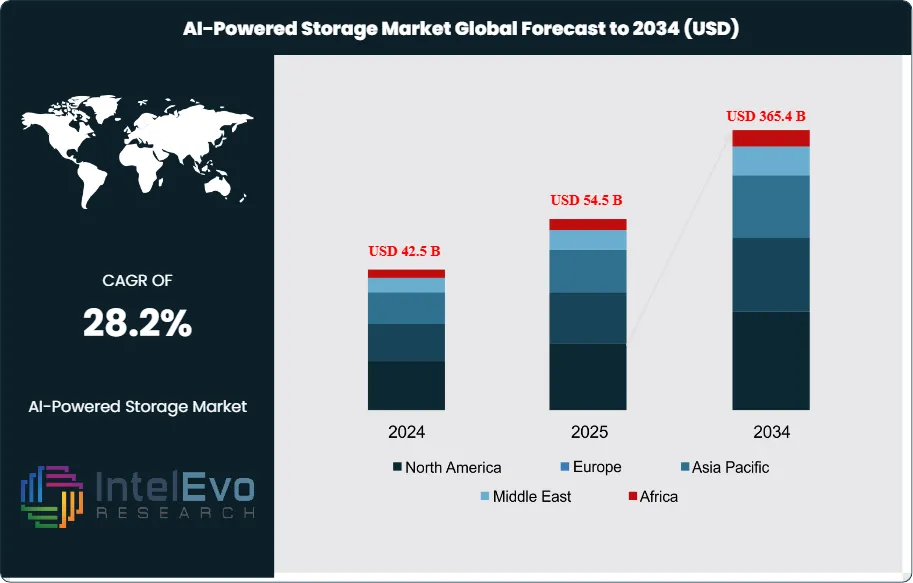

The AI-Powered Storage Market is estimated at USD 42.5 Billion in 2024 and is projected to reach approximately USD 365.4 Billion by 2034, with a forecast compound annual growth rate (CAGR) of about 28.2% over 2025–2034. Growing investment in GPU-centric infrastructure, rising enterprise adoption of generative AI, and the rapid expansion of unstructured data workloads are accelerating market expansion.
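Headline forecasts of this kind rest on the textbook compound-growth relation, CAGR = (end value / start value)^(1/n) − 1 over n annual periods. The sketch below is a minimal Python check under that standard definition; the function names `cagr` and `project` are illustrative and are not part of the report's methodology.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1.0 / periods) - 1.0


def project(start_value: float, rate: float, periods: int) -> float:
    """Value reached after compounding start_value at `rate` for `periods` years."""
    return start_value * (1.0 + rate) ** periods


if __name__ == "__main__":
    # Endpoints quoted in the overview, in USD billion.
    base_2024, target_2034 = 42.5, 365.4
    # Assumption: ten annual compounding periods from the 2024 base to 2034.
    implied = cagr(base_2024, target_2034, periods=10)
    print(f"Implied CAGR 2024->2034: {implied:.1%}")
    print(f"2034 value at 28.2% from the 2024 base: {project(base_2024, 0.282, 10):.1f}")
```

Applied to the overview's endpoints over ten annual periods, `cagr` returns roughly 24.0%, and compounding USD 42.5 billion at 28.2% for ten years gives about USD 510 billion. The section does not state the base value or compounding window behind its 28.2% figure, so treat this as a consistency check under the ten-period assumption, not a restatement of the forecast.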

As AI pipelines demand higher throughput, lower latency, and policy-driven automation, organizations are increasingly transitioning from traditional storage architectures to intelligent, self-optimizing platforms. The surge in vector databases, RAG (retrieval-augmented generation) systems, and cloud-native AI services is expected to further push demand, making AI-powered storage one of the fastest-growing segments in the data infrastructure landscape.

Adoption of AI-powered storage is growing quickly as companies move from manual data management to intelligent, automated storage systems. By 2025, organizations will increasingly rely on AI models to classify, tier, place, and retrieve files and objects in real time, reducing the need for manual policy adjustments and lowering operational costs. What began as limited use in analytics and backup setups has expanded into critical AI/ML workflows, where storage throughput and microsecond-level latency directly affect GPU utilization and training efficiency.

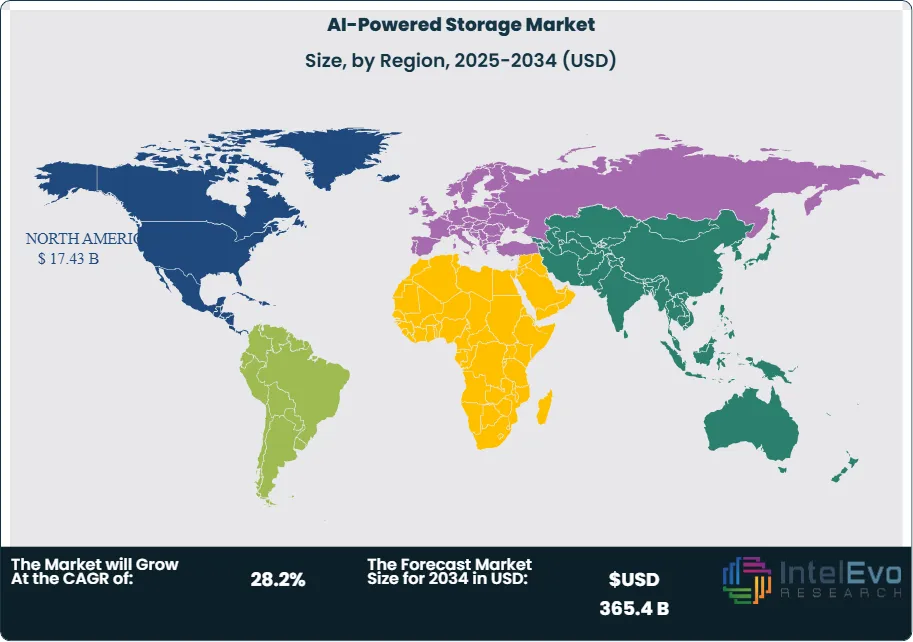

North America is still the center of spending, making up over 40% of global market revenue in 2024. The region benefits from the growth of hyperscalers, wider availability of AI chips, and strong enterprise demand for flash-first storage solutions, NVMe-oF networks, and scalable object storage. These systems now support training processes, inference platforms, and data-heavy applications, enabling real-time analytics, content creation, and streaming tasks. As companies modernize their stateful workloads, AI-powered storage is essential to prevent bottlenecks in distributed data environments.

In 2025, demand will be shaped largely by the rapid growth of unstructured data from IoT sensors, application logs, media files, vector embeddings, and multimodal datasets. Companies are tightening their RTO/RPO targets and increasingly depend on self-managing clusters to predict capacity needs, balance workloads, and detect issues without manual intervention. Vendors report strong gains in storage efficiency as AI tools optimize caching, placement, and deduplication based on observed workload behavior rather than fixed policies.
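The paragraph says clusters now predict capacity needs on their own but does not describe the models involved. As a hedged illustration, the simplest form of capacity forecasting is a least-squares linear trend over recent usage samples, extrapolated to a fill threshold. The function names and the 85% threshold are hypothetical choices for this sketch, not any vendor's method.

```python
from typing import Optional, Sequence, Tuple


def fit_linear_trend(days: Sequence[float], used_tb: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of used_tb ~ slope * day + intercept."""
    n = len(days)
    if n < 2 or n != len(used_tb):
        raise ValueError("need at least two paired samples")
    mean_x = sum(days) / n
    mean_y = sum(used_tb) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    if sxx == 0:
        raise ValueError("sample days must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, used_tb))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_full(
    days: Sequence[float],
    used_tb: Sequence[float],
    capacity_tb: float,
    fill_threshold: float = 0.85,  # hypothetical alerting threshold
) -> Optional[float]:
    """Days after the last sample until usage crosses fill_threshold * capacity.

    Returns None when the fitted trend is flat or shrinking.
    """
    slope, intercept = fit_linear_trend(days, used_tb)
    if slope <= 0:
        return None
    limit_tb = fill_threshold * capacity_tb
    crossing_day = (limit_tb - intercept) / slope
    # Clamp at zero: the threshold may already have been crossed.
    return max(0.0, crossing_day - days[-1])
```

For example, a 200 TB cluster growing 2 TB per day from 100 TB reaches the 85% mark (170 TB) 31 days after the fifth daily sample. Production systems would account for seasonality and bursty ingest, which a straight-line fit ignores.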

On the supply side, advances in QLC flash endurance, software-defined erasure coding, and intelligent data reduction are significantly lowering cost per terabyte. AI-driven caching and placement reduce write load on flash media and cut power usage, a growing concern as global data center energy consumption rises. Operators now track watts per terabyte as a key performance indicator, which is driving investment in storage telemetry and energy-aware workload management.
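To make the cost-per-terabyte and watts-per-terabyte arithmetic concrete, the sketch below compares protection schemes on a hypothetical system. It counts only erasure-coding overhead and a single data-reduction ratio; spare capacity, metadata, and rebuild reserves are ignored, and every price, wattage, and the 2:1 reduction ratio are illustrative assumptions rather than figures from the report.

```python
def usable_fraction(data_shards: int, parity_shards: int) -> float:
    """Share of raw capacity that holds data under k+m erasure coding.

    Three-way replication behaves like k=1, m=2 (one data copy, two redundant copies).
    """
    if data_shards <= 0 or parity_shards < 0:
        raise ValueError("need data_shards > 0 and parity_shards >= 0")
    return data_shards / (data_shards + parity_shards)


def logical_tb(raw_tb: float, k: int, m: int, reduction_ratio: float = 1.0) -> float:
    """Logical capacity after protection overhead and data reduction (dedupe/compression)."""
    if reduction_ratio <= 0:
        raise ValueError("reduction_ratio must be positive")
    return raw_tb * usable_fraction(k, m) * reduction_ratio


def cost_per_logical_tb(raw_cost: float, raw_tb: float, k: int, m: int, reduction_ratio: float = 1.0) -> float:
    """Purchase cost spread over logical (not raw) terabytes."""
    return raw_cost / logical_tb(raw_tb, k, m, reduction_ratio)


def watts_per_logical_tb(system_watts: float, raw_tb: float, k: int, m: int, reduction_ratio: float = 1.0) -> float:
    """Power draw per logical terabyte, the KPI named in the text."""
    return system_watts / logical_tb(raw_tb, k, m, reduction_ratio)


if __name__ == "__main__":
    # Hypothetical system: 1,000 TB raw, USD 100,000, drawing 2,000 W, 2:1 data reduction.
    for label, k, m in [("3-way replication", 1, 2), ("8+2 erasure coding", 8, 2)]:
        cost = cost_per_logical_tb(100_000, 1_000, k, m, reduction_ratio=2.0)
        watts = watts_per_logical_tb(2_000, 1_000, k, m, reduction_ratio=2.0)
        print(f"{label}: USD {cost:.1f}/TB, {watts:.2f} W/TB")
```

With these assumed inputs, replication works out to about USD 150 and 3.0 W per logical terabyte versus about USD 62.5 and 1.25 W for 8+2 erasure coding, which is the kind of gap that makes protection overhead a lever on both cost and energy KPIs.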

Regulation is a major factor in deployment strategy. Data residency rules in the EU, the Middle East, and Asia are prompting investment in sovereign cloud regions and on-premises object storage with built-in encryption, intelligent metadata processing, and privacy-by-default policies. At the same time, cybersecurity threats, especially ransomware, are driving adoption of immutable snapshots, AI-driven anomaly detection, and continuous verification of data retention policies. Model drift in AIOps systems has become a significant operational risk that requires human oversight and clear audit capabilities.
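Commercial ransomware detectors use richer signals (data entropy, file-type changes, access patterns) that the report does not detail. A minimal stand-in for the anomaly-detection idea is a trailing z-score on the volume of data changed between consecutive snapshots, on the assumption that mass encryption tends to inflate that volume. The function name, window, threshold, and sigma floor are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def flag_change_surges(
    changed_gb: Sequence[float],
    window: int = 7,
    z_threshold: float = 3.0,
    min_sigma_gb: float = 1.0,
) -> List[int]:
    """Return indices of snapshots whose changed-data volume surges above a trailing baseline.

    One-sided test: only upward deviations are flagged, since encryption rewrites data.
    """
    if window < 2:
        raise ValueError("window must cover at least two snapshots")
    flagged: List[int] = []
    for i in range(window, len(changed_gb)):
        baseline = changed_gb[i - window:i]
        mu = mean(baseline)
        # Floor the spread so a perfectly steady baseline does not flag tiny changes.
        sigma = max(pstdev(baseline), min_sigma_gb)
        if (changed_gb[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged
```

For daily change volumes of `[50, 52, 49, 51, 50, 53, 48, 51, 400, 50]` GB, only index 8 is flagged. One design shortcut worth noting: a flagged point stays in later baselines and widens them, so a production version would likely exclude confirmed anomalies and keep a human reviewer in the loop, in line with the oversight concern above.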

Hybrid and multi-cloud strategies now lead enterprise plans, featuring Kubernetes-managed stateful services, vector databases for RAG workloads, and automated tiering between high-performance flash and deep object archives. Companies like Pure Storage, NetApp, and Western Digital are heavily investing in AI-native capabilities, predictive analytics, and cross-cloud functionality. The Asia Pacific region is becoming the fastest-growing area due to new data center construction, telco AI initiatives, and increasing sovereign AI projects. Investment is consolidating around platforms that offer open APIs, GPU-aware scheduling, and seamless data transfer—essential features for the next generation of AI-driven businesses.

Key Takeaways

- Market Growth: The market is projected to reach USD 365.4 Billion by 2034, up from USD 42.5 Billion in 2024, at a 28.2% CAGR. Expansion tracks surging unstructured data, the throughput needs of AI training and inference, and real-time analytics in your core applications.

- Component: Hardware led in 2023 with 72% share, reflecting spend on flash arrays, controllers, and performance fabrics to remove I/O bottlenecks. Software and services trail as buyers prioritize latency, throughput, and capacity at scale.

- Media Type: SSD captured 55% share in 2023 as NVMe and QLC adoption lowered latency and improved $/TB for AI pipelines. HDD remains for cold archives, but inference and feature-store workloads favor solid state.

- Driver: Performance-centric architectures are winning. Direct Attached Storage held 35% share in 2023, signaling demand for local, high-bandwidth access that maximizes GPU utilization and shortens model iteration cycles.

- Restraint: Power, cooling, and rack density constraints slow new deployments; energy and facilities costs can represent ~20–25% of storage TCO in dense AI clusters (assumption). Procurement cycles lengthen where data-residency or security certifications add compliance steps.

- Opportunity: If SSD maintains its 55% share, SSD-based systems could approach USD 155–160 Billion of 2033 spend as enterprises shift training and retrieval to flash tiers (assumption). You can capture this by standardizing on NVMe-oF and automated tiering to deep object stores.

- Trend: Hybrid and multi-cloud designs are becoming standard, with AIOps guiding placement, caching, and recovery policies. Vendor actions signal the pivot: Pure Storage at ~USD 6 Billion market cap integrates predictive analytics; NetApp at ~USD 14 Billion advances AI-driven data management; Western Digital at ~USD 16 Billion added AI analytics via the cline acquisition.

- Regional Analysis: North America led with >40% share in 2023, supported by hyperscaler capex and AI chip availability. Asia Pacific is emerging as the fastest-growing region on the back of greenfield data centers and telco AI workloads, and is likely to outpace the global CAGR from 2024–2033.
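The Opportunity takeaway puts SSD-based systems at USD 155–160 Billion of 2033 spend without showing the derivation. One way to reproduce a figure in that range, assuming the 2033 market size is obtained by deflating the 2034 forecast back one year at the stated 28.2% CAGR and that SSD holds its 55% share, is sketched below; both the deflation step and the function names are assumptions, not the report's documented method.

```python
def deflate_one_year(value: float, growth_rate: float) -> float:
    """Back out the prior year's value assuming constant annual growth."""
    return value / (1.0 + growth_rate)


def segment_value(total: float, share: float) -> float:
    """Spend attributable to a segment holding `share` of the total."""
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    return total * share


if __name__ == "__main__":
    market_2034 = 365.4  # USD billion, from the report
    # Assumption: 2033 value = 2034 value deflated one year at the 28.2% CAGR.
    market_2033 = deflate_one_year(market_2034, 0.282)
    # Assumption: SSD keeps the 55% share it held in 2023.
    ssd_2033 = segment_value(market_2033, 0.55)
    print(f"Market 2033: USD {market_2033:.1f} B; SSD portion: USD {ssd_2033:.1f} B")
```

Under these assumptions the 2033 market comes to about USD 285 billion and the SSD portion to about USD 157 billion, inside the 155–160 range quoted in the takeaway.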

Storage System Analysis

DAS remained the leading architecture into 2025 after capturing more than 35% of revenue in 2023. Its direct server attachment delivers low latency and predictable bandwidth, which keeps GPU nodes fed during training and inference. You benefit from simpler deployment and tighter control at the edge and in single-tenant clusters.

NAS and SAN together account for the remaining share and continue to scale in mixed workloads. NAS supports shared datasets, feature stores, and MLOps pipelines where multiple teams need concurrent access. SAN suits block-intensive training jobs and high-IOPS metadata workloads, with NVMe-oF adoption widening in Tier-1 enterprises as you consolidate performance tiers.

Offering Analysis

Hardware retained a dominant 72% share in 2023 and remains the spending anchor in 2025. Buyers prioritize flash arrays, controllers, and accelerators to lift throughput and reduce queue depths on AI nodes. Your capex focuses on performance per watt and rack density as data volumes expand.

Software and subscriptions are growing faster than the base, driven by AIOps, policy automation, and data-reduction suites. Enterprises are standardizing telemetry-led tiering, ransomware detection, and workflow orchestration to shrink manual tickets and raise utilization. You should expect attach rates for software features to climb as fleets scale.

Storage Medium Analysis

SSDs led with over 55% share in 2023 and continue to expand in 2025 on NVMe and QLC adoption. They cut tail latency and support parallel reads for large batch jobs and vector retrieval. Your inference nodes and feature stores gain from consistent microsecond access.

HDDs retain a role in cold archives and deep object stores where $/TB drives decisions. Providers pair SSD front tiers with HDD-backed capacity for model artifacts, checkpoints, and compliance data. This mix preserves performance while containing long-term storage costs.
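The SSD-front, HDD-backed pattern depends on deciding which objects earn flash space. The report attributes this to AI-driven placement without specifying a model, so the sketch below substitutes a simple, well-known heuristic: an exponentially decayed access count per object, with the hottest objects per gigabyte packed first-fit into a capacity-limited SSD tier. The class name, the 24-hour half-life, and the greedy packing are hypothetical choices.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class ObjectStats:
    size_gb: float
    score: float = 0.0        # exponentially decayed access count
    last_update: float = 0.0  # time (hours) of the last score update


class HeatTiering:
    """Hypothetical planner: hottest objects per GB go to SSD until it is full; the rest go to HDD."""

    def __init__(self, ssd_capacity_gb: float, half_life_hours: float = 24.0):
        self.ssd_capacity_gb = ssd_capacity_gb
        self.decay_rate = math.log(2) / half_life_hours
        self.objects: Dict[str, ObjectStats] = {}

    def _decay_to(self, stats: ObjectStats, now: float) -> None:
        # Older accesses count for less: the score halves every half-life.
        stats.score *= math.exp(-self.decay_rate * (now - stats.last_update))
        stats.last_update = now

    def record_access(self, key: str, size_gb: float, now: float) -> None:
        if size_gb <= 0:
            raise ValueError("size_gb must be positive")
        stats = self.objects.get(key)
        if stats is None:
            stats = self.objects[key] = ObjectStats(size_gb=size_gb, last_update=now)
        self._decay_to(stats, now)
        stats.score += 1.0

    def plan(self, now: float) -> Dict[str, str]:
        for stats in self.objects.values():
            self._decay_to(stats, now)
        # Rank by heat density so small hot objects are not crowded out by large ones.
        ranked = sorted(
            self.objects.items(),
            key=lambda item: item[1].score / item[1].size_gb,
            reverse=True,
        )
        placement: Dict[str, str] = {}
        used_gb = 0.0
        for key, stats in ranked:
            # First-fit: keep scanning, since a smaller object later may still fit.
            if used_gb + stats.size_gb <= self.ssd_capacity_gb:
                placement[key] = "ssd"
                used_gb += stats.size_gb
            else:
                placement[key] = "hdd"
        return placement
```

With a 100 GB SSD tier, a 10 GB index read three times and a 50 GB feature set read ten times in the current hour land on SSD, while an 80 GB checkpoint touched once ten hours earlier stays on HDD. Greedy ranking by density is only a heuristic for this knapsack-style problem, which is one reason vendors layer learned models on top of it.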

End-Users Analysis

Enterprises held more than 37% share in 2023 and continue to lead. Data-centric operations in BFSI, healthcare, and automotive push requirements for compliance, uptime, and fast recovery. Your roadmaps favor hybrid deployment and sovereign controls.

Government bodies scale AI programs with strict residency and audit mandates. Cloud service providers drive multi-petabyte expansions tied to GPU clusters and managed AI services. Telecom companies add storage at the edge for 5G analytics and network automation. You will see rising orders for ruggedized nodes and local retention at cell sites.

Regional Analysis

North America accounted for over 40% of 2023 revenue and remains the largest market in 2025. Hyperscaler capex, AI chip supply, and mature channel support sustain high refresh cycles. Your near-term opportunities concentrate in GPU-adjacent storage and NVMe fabrics.

Europe grows on GDPR-aligned architectures and energy-efficient designs. Asia Pacific emerges as the fastest-growing region on greenfield data centers in China, India, and Southeast Asia and is likely to outpace the 28.2% global CAGR over the forecast period (assumption). Latin America and the Middle East & Africa expand from a smaller base, led by telco and government projects that require resilient edge storage and sovereign cloud options.

Market Key Segments

By Offering

- Hardware

- Software

By Storage System

- Network Attached Storage (NAS)

- Direct Attached Storage (DAS)

- Storage Area Network (SAN)

By Storage Medium

- Solid State Drive (SSD)

- Hard Disk Drive (HDD)

By End-User

- Enterprise

- Government Bodies

- Cloud Service Providers

- Telecom Companies

By Regions

- North America

- Latin America

- East Asia and Pacific

- SEA and South Asia

- Eastern Europe

- Western Europe

- Middle East & Africa

| Report Attribute | Details |
| --- | --- |
| Market size (2024) | USD 42.5 B |
| Forecast Revenue (2034) | USD 365.4 B |
| CAGR (2024-2034) | 28.2% |
| Historical data | 2018-2023 |
| Base Year For Estimation | 2024 |
| Forecast Period | 2025-2034 |
| Report coverage | Revenue Forecast, Competitive Landscape, Market Dynamics, Growth Factors, Trends and Recent Developments |
| Segments covered | By Offering (Hardware, Software), By Storage System (Network Attached Storage (NAS), Direct Attached Storage (DAS), Storage Area Network (SAN)), By Storage Medium (Solid State Drive (SSD), Hard Disk Drive (HDD)), By End-Users (Enterprise, Government Bodies, Cloud Service Providers, Telecom Companies) |
| Research Methodology | |
| Regional scope | |
| Competitive Landscape | NVIDIA Corporation, Hewlett Packard Enterprise (HPE), Samsung Electronics, NetApp, Hitachi, Intel Corporation, Dell Technologies, Micron Technology, IBM, Cisco Systems, Pure Storage, Toshiba, Lenovo, Other key players |
| Customization Scope | Customization for segments, region/country-level will be provided. Moreover, additional customization can be done based on the requirements. |
| Pricing and Purchase Options | Avail customized purchase options to meet your exact research needs. We have three licenses to opt for: Single User License, Multi-User License (Up to 5 Users), Corporate Use License (Unlimited User and Printable PDF). |

